- Spark Email For Windows 10

- Adobe Spark Download For Mac

- Download Spark Chat For Mac

- Download Spark For Mac Software

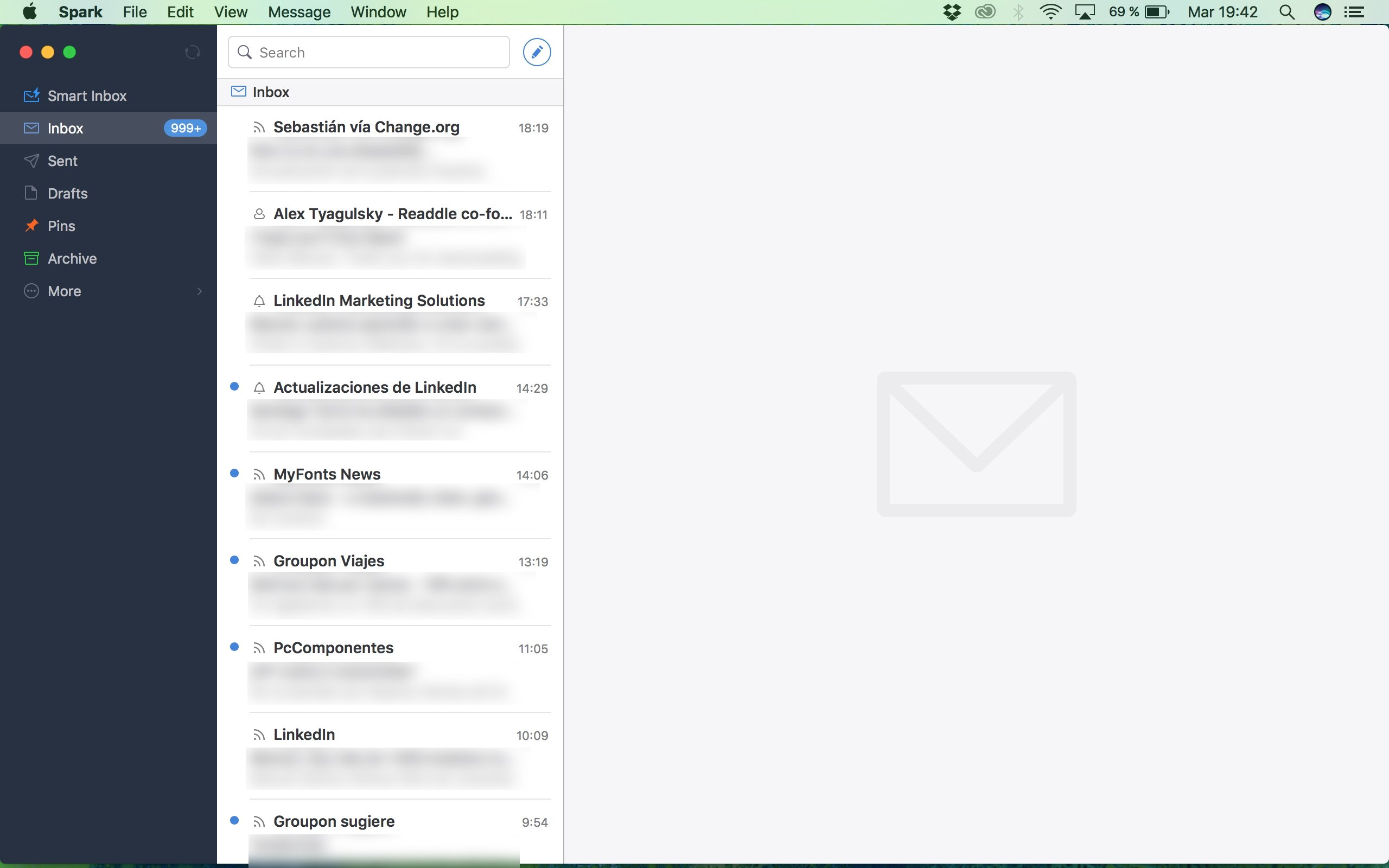

Spark for Teams allows you to create, discuss, and share email with your colleagues. App Store and Mac App Store are service marks of Apple Inc.

Oct 02, 2018. Download Spark – Email App by Readdle for macOS 10.13 or later and enjoy it on your Mac. Spark is the best personal email client and a revolutionary email for teams. You will love your email again! Download Spark for Mac to take control of your inbox. Adobe Spark for PC DownloadLink: an emulator.

Install Latest Apache Spark on Mac OS. The following is a detailed step-by-step process to install the latest Apache Spark on Mac OS. We shall first install the dependencies: Java and Scala. To install these languages and the framework, we use Homebrew and xcode-select.

Simba’s Apache Spark ODBC and JDBC Drivers are described as the fastest and easiest way to connect Tableau to Apache Spark data. They include comprehensive high-performance data access, real-time integration, extensive metadata discovery, and robust SQL-92 support. The drivers efficiently map SQL to Spark SQL by transforming an application’s SQL query into the equivalent form in Spark SQL, enabling direct standard SQL-92 access to Apache Spark distributions. The drivers deliver full SQL application functionality, and real-time analytic and reporting capabilities to users.

Here is an easy step-by-step guide to installing PySpark and Apache Spark on macOS.

Step 1: Get Homebrew

Homebrew makes installing applications and languages on macOS a lot easier. You can get Homebrew by following the instructions on its website.

In short, you can install Homebrew from the terminal with the one-line install command shown on its website.

Step 2: Installing xcode-select

Xcode is a large suite of software development tools and libraries from Apple. In order to install Java and Spark through the command line, we will probably need the Xcode command line tools, which are installed with xcode-select.

Use the command below in your terminal to install them: xcode-select --install

You will usually get a prompt asking you to confirm the installation. Click “Install” to continue.

Step 3: DO NOT use Homebrew to install Java!

At the time of writing, the latest version of Java is Java 10, and Apache Spark does not officially support Java 10. Homebrew will install the latest version of Java, and that causes many issues.

To install Java 8, please go to the official website: https://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

Then, under “Java SE Development Kit 8u191”, choose the following download:

Mac OS X x64, 245.92 MB, jdk-8u191-macosx-x64.dmg

Once Java is downloaded, go ahead and install it locally.
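Since the whole point of this step is to end up on Java 8 rather than a newer release, it can help to confirm which version the terminal actually picks up. Here is a minimal sketch, assuming a `java` command is on your PATH; the function names are made up for illustration and are not part of Spark or Java.

```python
import re
import subprocess


def parse_java_major(version_output):
    """Return the major Java version found in `java -version` output, or None."""
    match = re.search(r'version "([^"]+)"', version_output)
    if match is None:
        return None
    parts = match.group(1).split(".")
    # Java 8 and earlier report e.g. "1.8.0_191"; Java 9 and later report e.g. "10.0.2".
    major = parts[1] if parts[0] == "1" and len(parts) > 1 else parts[0]
    digits = re.match(r"\d+", major)
    return int(digits.group()) if digits else None


def installed_java_major():
    """Run `java -version` and return the major version, or None if unavailable."""
    try:
        result = subprocess.run(
            ["java", "-version"], capture_output=True, text=True, check=False
        )
    except OSError:
        # No runnable `java` command was found.
        return None
    # `java -version` writes its report to stderr, so both streams are inspected.
    return parse_java_major(result.stderr + result.stdout)


if __name__ == "__main__":
    major = installed_java_major()
    if major is None:
        print("Could not determine a Java version.")
    elif major == 8:
        print("Java 8 detected.")
    else:
        print(f"Java {major} detected; this guide assumes Java 8.")
```

If the script reports anything other than Java 8, the Spark steps that follow may run into the compatibility issues mentioned above.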


Step 3 (continued): Use Homebrew to install Apache Spark

To do so, go to your terminal and type: brew install apache-spark

Homebrew will now download and install Apache Spark. This may take some time depending on your internet connection. You can check the version of Spark using the command below in your terminal: pyspark --version

You should then see the Spark welcome banner along with the installed version number.

Step 4: Install PySpark and FindSpark in Python

To be able to use PySpark locally on your machine, you need to install the findspark and pyspark packages.

If you use Anaconda, install them with conda rather than pip.
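To see whether the two packages are already available in your Python environment, a quick check like the one below can help. This is only a sketch: the `missing_packages` helper is hypothetical, and the install commands it prints are assumptions (the usual `pip install` form, and the conda-forge channel for Anaconda), so adjust them to your setup.

```python
import importlib.util


def missing_packages(names):
    """Return the subset of `names` that cannot be imported in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(["pyspark", "findspark"])
    if not missing:
        print("pyspark and findspark are both importable.")
    else:
        packages = " ".join(missing)
        # Assumed install commands; they are suggestions, not the only way to install.
        print(f"Missing: {packages}")
        print(f"  pip:   pip install {packages}")
        print(f"  conda: conda install -c conda-forge {packages}")
```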

Step 5: Your first code in Python

After the installation is complete, you can write your first hello-world script:
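A hello-world along these lines might look like the sketch below. It assumes findspark can locate your Spark installation and that Spark runs in local mode; the application name, the sample words, and the helper names are made up for illustration.

```python
import importlib.util
import shutil


def hello_spark():
    """Start a local Spark session, count a few words, and return the counts."""
    # Imported here so the file can be loaded even before Spark is installed.
    import findspark

    findspark.init()  # locates the Spark installation and adds it to sys.path
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder.master("local[*]")  # run on all local cores
        .appName("HelloWorld")  # hypothetical application name
        .getOrCreate()
    )
    try:
        words = spark.sparkContext.parallelize(["hello", "world", "hello", "spark"])
        counts = words.map(lambda w: (w, 1)).reduceByKey(lambda a, b: a + b)
        return dict(counts.collect())
    finally:
        spark.stop()


def spark_prerequisites_present():
    """Rough check that the Python packages and a `java` command are available."""
    packages_ok = all(
        importlib.util.find_spec(name) is not None
        for name in ("pyspark", "findspark")
    )
    return packages_ok and shutil.which("java") is not None


if __name__ == "__main__":
    if spark_prerequisites_present():
        print(hello_spark())
    else:
        print("Install Java, pyspark, and findspark before running this script.")
```

The Spark imports sit inside the function so that the script fails with a friendly message, rather than an import error, when one of the earlier steps was skipped.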

IntelliJ Scala and Spark Setup Overview

In this tutorial, we’re going to review one way to set up IntelliJ for Scala and Spark development. The IntelliJ Scala combination is the best free setup for Scala and Spark development. I have nothing against ScalaIDE (Eclipse for Scala) or using editors such as Sublime. I switched from Eclipse years ago and haven’t looked back. I’ve also sincerely tried to follow the Pragmatic Programmer suggestion of using one editor (IDE), but I keep coming back to IntelliJ when doing JVM-based development.

But you probably don’t really care about all my history. Let’s get back to you. You’re here to set up IntelliJ with Scala and hopefully use it with Spark, right?

In this tutorial, we’re going to try to go fast with lots of screenshots. If you have questions or comments on how to improve, let me know.

After you complete this Spark with IntelliJ tutorial, I know you’ll find the Spark Debug in IntelliJ tutorial helpful as well.

Assumptions

I’m going to make assumptions about you in this post.

- You are not a newbie to programming and computers. You know how to download and install software.

- You might need to update these instructions for your environment. YMMV. I’m not going to cover every nuance between Linux, OS X and Windows. And no, I’m not going to cover SunOS vs Solaris for you old timers like me.

- You will speak up if you have questions or suggestions on how to improve. There should be a comments section at the bottom of this post.

- You’re a fairly nice person who appreciates a variety of joke formats now and again.

If you have any issues or concerns with these assumptions, please leave now. It will be better for both of us.

Prerequisites (AKA: Requirements for your environment)

- Java 8 installed

- IntelliJ Community Edition downloaded from https://www.jetbrains.com/idea/download/ and extracted (unzipped, untarred, exploded, whatever you call it)

Configuration Steps (AKA: Ándale arriba arriba)

- Start IntelliJ for first time

- Install Scala plugin

- Create New Project for Scala Spark development

- Scala Smoketest. Create and run Scala HelloMundo program

- Scala Spark Smoketest. Create and run a Scala Spark program

- Eat, drink and be merry

Ok, let’s go.

1. Start IntelliJ for first time

Is this your first time running IntelliJ? If so, start here. Otherwise, move to #2.


When you start IntelliJ for the first time, it will guide you through a series of setup screens.

At one point, you will be asked if you would like to install the Scala plugin from the “Featured” plugins screen.

Do that. Click Install to install the Scala plugin.

2. Install Scala plugin

If this is not the first time you’ve launched IntelliJ and you do not have the Scala plugin installed, then stay here. To install the Scala plugin, here’s a screencast of how to do it on a Mac. (Note: I already have it installed; you will need to check the box.)


3. Create New Project for Scala Spark development

Ok, we want to create a super simple project to make sure we are on the right course. Here’s a screencast of me being on the correct course for Scala IntelliJ projects.

4. Create and Run Scala HelloMundo program

Well, nothing to see here. Take a break if you want. We are halfway home. See the screencast in the previous step. That’s it, because we ran the HelloMundo code in that screencast already.

5. Create and Run Scala Spark program

Let’s create another Scala object and add some Spark API calls to it. Again, let’s make this as simple as possible (AKA: KISS principle) to make sure we are on the correct course. In this step, we create a new Scala object and import Spark jars as library dependencies in IntelliJ. Not everything runs perfectly, so watch how to address it in the video. Oooh, we’re talking bigtime drama here, people. Hold on.

Here’s a screencast


Did I surprise you with the Scala 2.11 vs. Scala 2.10 snafu? I don’t mean to mess with you. Just trying to keep it interesting. Check out the other Spark tutorials on this site or the Spark with Scala course, where I deal with this fairly common scenario in much more detail. This is a post about IntelliJ, Scala and Spark.

Notice how I’m showing that I have a Standalone Spark cluster running. You need to have one running in order for this Spark Scala example to run correctly. See Standalone Spark cluster if you need some help with this setup.


Code for the Scala Spark program


6. Conclusion (AKA: eat, drink and be merry)

You’re now set. Next step for you might be adding SBT into the mix. But, for now, let’s just enjoy this moment. You just completed Spark with Scala in IntelliJ.

If you have suggestions on how to improve this tutorial or any other feedback or ideas, let me know in the comments below.

Scala with IntelliJ Additional Resources


As mentioned above, don’t forget about the next tutorial How to Debug Scala Spark in IntelliJ.


For those looking for even more Scala with IntelliJ, you might find the Just Enough Scala for Apache Spark course valuable.